Installing a K8S Cluster with kubeadm

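Prerequisites

- 3 CentOS machines

- Network connectivity between the nodes (network/security policies allow traffic)

- Internet access

- A unique hostname and MAC address on every node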
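kubeadm's documentation also asks for a unique product_uuid on every node, which VMs cloned from one template often share. One way to check, sketched under assumptions: on each node print one line "hostname mac product_uuid" (for example with echo "$(hostname) $(cat /sys/class/net/eth0/address) $(sudo cat /sys/class/dmi/id/product_uuid)", where eth0 is an assumed interface name), collect all lines into one file, and run the hypothetical helper find_duplicate_ids on it:

```shell
# Hypothetical helper: read "hostname mac product_uuid" lines (one per node)
# from a file and print every value that appears on more than one node.
find_duplicate_ids() {
  local file=$1 col
  for col in 1 2 3; do
    # Extract one column and lower-case it (MACs/UUIDs may differ only in case);
    # uniq -d then reports the values that occur more than once.
    awk -v c="$col" 'NF >= 3 { print tolower($c) }' "$file" | sort | uniq -d
  done
}
```

No output means every hostname, MAC address and product_uuid is unique.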

yum configuration

yum

curl -o /etc/yum.repos.d/Centos-7.repo http://mirrors.aliyun.com/repo/Centos-7.repo

docker

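Kubernetes needs a container runtime. dockershim was removed in v1.24, so containerd can be used directly (the docker-ce package below pulls in containerd.io as a dependency).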

curl -o /etc/yum.repos.d/docker-ce.repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

kubernetes

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum cache

yum clean all && yum makecache

Install tools

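yum -y install tree vim wget bash-completion bash-completion-extras net-tools unzip nc nmap telnet ntpdate

(bash-completion-extras comes from the EPEL repository; install epel-release first, otherwise yum reports that package as unavailable.)

Time settings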

timedatectl set-timezone Asia/Shanghai

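(echo "0 01 23 * * /usr/sbin/ntpdate ntp1.aliyun.com" ; crontab -l 2>/dev/null) | crontab -

Note: the schedule "0 01 23 * *" runs at 01:00 on the 23rd of every month; for a daily sync use "0 1 * * *".

Firewall, swap, SELinux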

## If firewalld is running, stop and disable it

sudo systemctl disable firewalld && sudo systemctl stop firewalld
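If firewalld has to stay enabled, an alternative to disabling it (not what this guide does) is to open only the ports the cluster uses. A minimal sketch, assuming the port list from the Kubernetes "Ports and Protocols" page plus UDP 8472 for flannel's VXLAN backend; open_k8s_ports and the FIREWALL_CMD dry-run variable are hypothetical:

```shell
# Hypothetical helper: open the ports a kubeadm cluster commonly needs instead
# of disabling firewalld. Set FIREWALL_CMD=echo for a dry run that only prints.
open_k8s_ports() {
  local role=$1 fw=${FIREWALL_CMD:-firewall-cmd} port
  local ports
  if [ "$role" = control-plane ]; then
    # API server, etcd, kubelet, kube-scheduler, kube-controller-manager
    ports="6443/tcp 2379-2380/tcp 10250/tcp 10259/tcp 10257/tcp"
  else
    # kubelet API and the NodePort range on worker nodes
    ports="10250/tcp 30000-32767/tcp"
  fi
  # Assumed: flannel's VXLAN backend on UDP 8472, needed on every node
  ports="$ports 8472/udp"
  for port in $ports; do
    $fw --permanent --add-port="$port"
  done
  # Load the permanent rules into the running firewall
  $fw --reload
}
```

For example, FIREWALL_CMD=echo open_k8s_ports worker prints the firewall-cmd arguments without changing anything; run it as root without FIREWALL_CMD to apply them.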

# Disable SELinux (setenforce 0 switches to permissive mode now; the sed edit takes effect after a reboot)

sudo setenforce 0

sudo sed -ri 's#(SELINUX=).*#\1disabled#' /etc/selinux/config

# Turn off swap; by default kubelet fails to start while swap is enabled

sudo swapoff -a
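swapoff -a only affects the running system; the fstab edit that follows keeps swap off after a reboot. /proc/swaps lists one active swap area per line after a header line, so a quick check can simply count those lines. A sketch with a hypothetical helper name:

```shell
# Hypothetical helper: count active swap areas. /proc/swaps has one header
# line followed by one line per active swap area.
active_swap_count() {
  local swaps=${1:-/proc/swaps}
  # Skip the header (NR > 1) and count the remaining non-empty lines.
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$swaps"
}
```

Usage: [ "$(active_swap_count)" -eq 0 ] || echo "swap is still enabled"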

# Disable swap permanently (comment out the swap entry in /etc/fstab)

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

ipvs

# Load the IPVS modules (br_netfilter is needed for the net.bridge.* kernel parameters below)

modprobe br_netfilter

modprobe -- ip_vs

modprobe -- ip_vs_sh

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- nf_conntrack_ipv4   # on kernels 4.19 and later this module is named nf_conntrack

# Verify that the ip_vs modules are loaded

lsmod |grep ip_vs

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs_sh 12688 0

ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 139264 2 ip_vs,nf_conntrack_ipv4

libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
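modprobe only loads the modules until the next reboot. A sketch, assuming systemd's /etc/modules-load.d mechanism (available on CentOS 7) is used to load them again at boot; both function names are hypothetical. It also picks the conntrack module by kernel version, since nf_conntrack_ipv4 was merged into nf_conntrack in Linux 4.19:

```shell
# Hypothetical helper: print the conntrack module name for a kernel release
# such as "3.10.0-1160.el7.x86_64" (defaults to the running kernel).
conntrack_module() {
  local release=${1:-$(uname -r)}
  local ver=${release%%-*}      # "3.10.0"
  local major=${ver%%.*}        # "3"
  local rest=${ver#*.}          # "10.0"
  local minor=${rest%%.*}       # "10"
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# Hypothetical helper: write the module list to a modules-load.d file
# (the default path is an assumption; any *.conf file in that directory works).
write_k8s_modules() {
  local target=${1:-/etc/modules-load.d/k8s.conf} release=$2
  printf '%s\n' br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh \
    "$(conntrack_module "$release")" > "$target"
}
```

Run write_k8s_modules as root; systemd-modules-load reads the file on the next boot.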

# Kernel parameters

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward=1

vm.max_map_count=262144

EOF

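# Apply and verify the kernel parameters

sysctl -p /etc/sysctl.d/k8s.conf

Install Docker

Alternatively, install containerd on its own; either way, pay attention to its configuration in /etc/containerd/config.toml.

yum install docker-ce -y

Configure Docker

mkdir -p /etc/docker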

cat <<EOF > /etc/docker/daemon.json

{

"registry-mirrors": [

"https://registry.hub.docker.com",

"http://hub-mirror.c.163.com",

"https://docker.mirrors.ustc.edu.cn",

"https://registry.docker-cn.com"

],

"exec-opts": ["native.cgroupdriver=systemd"],

"data-root": "/data/docker",

"log-driver": "json-file",

"log-opts": {"max-size":"10m", "max-file":"3"}

}

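EOF

Kubernetes recommends the systemd cgroup driver over cgroupfs. On a systemd-based distribution, systemd is the init system and already acts as a cgroup manager, while Docker's default cgroup driver is cgroupfs, so the node ends up with two cgroup managers that each have their own view of the available and in-use resources. Under resource pressure this can make the node unstable. The kubelet and the container runtime must therefore use the same driver; since v1.22, kubeadm sets the kubelet's driver to systemd by default.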
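Once Docker has been restarted with this daemon.json, the driver it actually uses can be read with docker info --format '{{.CgroupDriver}}'. A sketch of a check around that command (the function name is hypothetical). Keep in mind that with dockershim gone, the kubelet in this guide talks to containerd, whose own SystemdCgroup option in /etc/containerd/config.toml is what has to match the kubelet.

```shell
# Hypothetical helper: compare Docker's active cgroup driver with the expected one.
# `docker info --format '{{.CgroupDriver}}'` prints e.g. "systemd" or "cgroupfs".
check_docker_cgroup_driver() {
  local want=${1:-systemd} have
  have=$(docker info --format '{{.CgroupDriver}}' 2>/dev/null) || {
    echo "docker info failed; is the daemon running?" >&2
    return 2
  }
  if [ "$have" = "$want" ]; then
    echo "ok: cgroup driver is $have"
  else
    echo "mismatch: docker uses $have, expected $want" >&2
    return 1
  fi
}
```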

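If this setting is left unchanged, kubeadm init (in older versions, when Docker was the runtime) warns:

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

Start

systemctl start docker && systemctl enable docker

Verify

docker run hello-world

Change the pause image address

To keep the pause image download from failing during installation, run containerd config dump > /etc/containerd/config.toml to export the current configuration to a file, then change the sandbox_image setting. If the existing file is the one shipped by the containerd.io package, it contains disabled_plugins = ["cri"], which must be removed or kubeadm cannot use containerd; regenerating the file with containerd config default (below) also fixes this.

## Edit /etc/containerd/config.toml and change the sandbox_image setting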

[plugins]

[plugins."io.containerd.grpc.v1.cri"]

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"

## If /etc/containerd/config.toml does not exist, export the default configuration to it

containerd config default > /etc/containerd/config.toml
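The containerd settings that matter here can also be changed with sed instead of by hand. A minimal sketch, assuming a version 2 config.toml as produced by containerd config default; the function name is hypothetical. Besides sandbox_image it flips SystemdCgroup from false to true, because kubeadm (v1.22+) configures the kubelet with the systemd cgroup driver by default and the runtime should use the same driver:

```shell
# Hypothetical helper: set sandbox_image and enable SystemdCgroup for runc
# in a containerd config.toml (GNU sed, edits the file in place).
tune_containerd_config() {
  local file=${1:-/etc/containerd/config.toml}
  local image=${2:-registry.aliyuncs.com/google_containers/pause:3.9}
  # Replace the whole right-hand side of the sandbox_image line, keeping indentation.
  sed -i -E "s#^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*#\1\"${image}\"#" "$file"
  # Switch the runc runtime to the systemd cgroup driver.
  sed -i -E 's#^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*)false#\1true#' "$file"
}
```

Then restart containerd as below; grep -n -e sandbox_image -e SystemdCgroup /etc/containerd/config.toml shows the result.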

## Restart containerd

systemctl restart containerd

Install kubeadm, kubelet, kubectl

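kubeadm: the tool that deploys the k8s cluster

kubelet: runs on every machine in the cluster and does things like starting pods and containers.

kubectl: the tool for talking to the cluster. It can be installed on the master node only.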
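Without version numbers, yum installs whatever is newest in the repository, which may not match the --kubernetes-version v1.28.0 passed to kubeadm init later (the nodes further down report v1.28.2). A sketch that builds pinned package names using yum's name-version syntax; the helper name is hypothetical:

```shell
# Hypothetical helper: print version-pinned package names so that kubelet,
# kubeadm and kubectl all come from the same release.
k8s_packages() {
  local version=$1 pkg
  [ -n "$version" ] || { echo "usage: k8s_packages VERSION" >&2; return 1; }
  for pkg in kubelet kubeadm kubectl; do
    printf '%s-%s\n' "$pkg" "$version"
  done
}
```

For example: sudo yum install -y $(k8s_packages 1.28.2) --disableexcludes=kubernetes. yum list --showduplicates kubeadm lists the versions the repository offers.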

sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

Enable at boot

systemctl enable kubelet.service

Install K8S
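The kubeadm init flags below can also be written as a configuration file and passed with kubeadm init --config. A sketch, assuming the kubeadm.k8s.io/v1beta3 API used by v1.28; write_kubeadm_config is hypothetical, and two settings go beyond the flags: kube-proxy in IPVS mode (loading the ip_vs modules alone does not move kube-proxy off its default iptables mode) and an explicit systemd cgroup driver for the kubelet:

```shell
# Hypothetical helper: write a kubeadm config equivalent to this guide's
# `kubeadm init` flags, plus kube-proxy IPVS mode and the systemd cgroup driver.
write_kubeadm_config() {
  local target=${1:-kubeadm-config.yaml} addr=${2:-192.168.2.133}
  cat > "$target" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: ${addr}
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.28.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}
```

Usage: write_kubeadm_config kubeadm-config.yaml 192.168.2.133 && kubeadm init --config kubeadm-config.yaml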

kubeadm init \

--apiserver-advertise-address=192.168.2.133 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.28.0 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16

Success message

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.2.133:6443 --token dm4r9m.xjlx0z6e4w54uxze \

--discovery-token-ca-cert-hash sha256:7c12c7c06ea525e6a16fc8b7f62416af11ed36d266eea348606fbbc49f6cd1f3
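The token in this join command has kubeadm's default lifetime of 24 hours; after that, kubeadm token create --print-join-command on the master prints a fresh join command. Bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}, so a token copied by hand can be sanity-checked. A sketch with a hypothetical helper:

```shell
# Hypothetical helper: succeed only if $1 looks like a kubeadm bootstrap token
# (6-character token ID, a dot, 16-character secret; lower-case letters and digits).
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eqx '[a-z0-9]{6}\.[a-z0-9]{16}'
}
```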

Follow the instructions in the output


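I am using the root user, so I ran:

export KUBECONFIG=/etc/kubernetes/admin.conf

(This only applies to the current shell; add the line to ~/.bash_profile to keep it across logins.)

Adding nodes: after init succeeds, it prints the following command; run it as root on each worker node:

kubeadm join 192.168.2.133:6443 --token dm4r9m.xjlx0z6e4w54uxze \

--discovery-token-ca-cert-hash sha256:7c12c7c06ea525e6a16fc8b7f62416af11ed36d266eea348606fbbc49f6cd1f3

Check the nodes. No CNI network plugin is installed yet, so they are NotReady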

[root@zili01 ~]# kubectl get node -A

NAME STATUS ROLES AGE VERSION

zili01 NotReady control-plane 23h v1.28.2

zili02 NotReady <none> 23h v1.28.2

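zili03 NotReady <none> 23h v1.28.2

Install the network plugin

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

(The project now lives at flannel-io/flannel; the current manifest is also published at https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml.)

In the file, set net-conf.json.Network to the same value as the --pod-network-cidr=10.244.0.0/16 passed to kubeadm init: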

data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

Deploy the plugin
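Before applying the manifest, the Network value can be compared with the pod CIDR. A sketch; check_flannel_network and its defaults (file name kube-flannel.yml, CIDR 10.244.0.0/16 from the kubeadm init command above) are assumptions:

```shell
# Hypothetical helper: read the "Network" value from a flannel manifest's
# net-conf.json and compare it with the pod CIDR given to kubeadm init.
check_flannel_network() {
  local manifest=${1:-kube-flannel.yml} want=${2:-10.244.0.0/16} have
  # Take the first line shaped like  "Network": "x.x.x.x/nn"  and keep the value.
  have=$(sed -n -E 's/^[[:space:]]*"Network":[[:space:]]*"([^"]+)".*/\1/p' "$manifest" | head -n 1)
  if [ "$have" = "$want" ]; then
    echo "ok: $have"
  else
    echo "mismatch: manifest has '${have:-<none>}', kubeadm uses $want" >&2
    return 1
  fi
}
```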

[root@zili01 install_k8s]# kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

Check

[root@zili01 install_k8s]# kubectl -n kube-system get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-66f779496c-hntt2 1/1 Running 0 23h 10.244.1.2 zili02 <none> <none>

coredns-66f779496c-n549l 1/1 Running 0 23h 10.244.1.3 zili02 <none> <none>

etcd-zili01 1/1 Running 0 23h 192.168.2.133 zili01 <none> <none>

kube-apiserver-zili01 1/1 Running 0 23h 192.168.2.133 zili01 <none> <none>

kube-controller-manager-zili01 1/1 Running 1 23h 192.168.2.133 zili01 <none> <none>

kube-proxy-hfjc4 1/1 Running 0 23h 192.168.2.134 zili02 <none> <none>

kube-proxy-kpkf7 1/1 Running 0 23h 192.168.2.135 zili03 <none> <none>

kube-proxy-xwpqj 1/1 Running 0 23h 192.168.2.133 zili01 <none> <none>

kube-scheduler-zili01 1/1 Running 1 23h 192.168.2.133 zili01 <none> <none>

[root@zili01 install_k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

zili01 Ready control-plane 23h v1.28.2

zili02 Ready <none> 23h v1.28.2

zili03 Ready <none> 23h v1.28.2
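Instead of re-running kubectl get nodes and reading it by eye, kubectl wait --for=condition=Ready nodes --all --timeout=300s blocks until every node is Ready. When only the list of lagging nodes is wanted, a small filter over kubectl get nodes --no-headers works; a sketch with a hypothetical name:

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin and
# print the names of nodes whose STATUS column is not exactly "Ready"
# (so "NotReady" and "Ready,SchedulingDisabled" are both listed).
not_ready_nodes() {
  awk 'NF >= 2 && $2 != "Ready" { print $1 }'
}
```

Usage: kubectl get nodes --no-headers | not_ready_nodes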

[root@zili01 install_k8s]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@zili01 install_k8s]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

[root@zili01 install_k8s]# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d15h

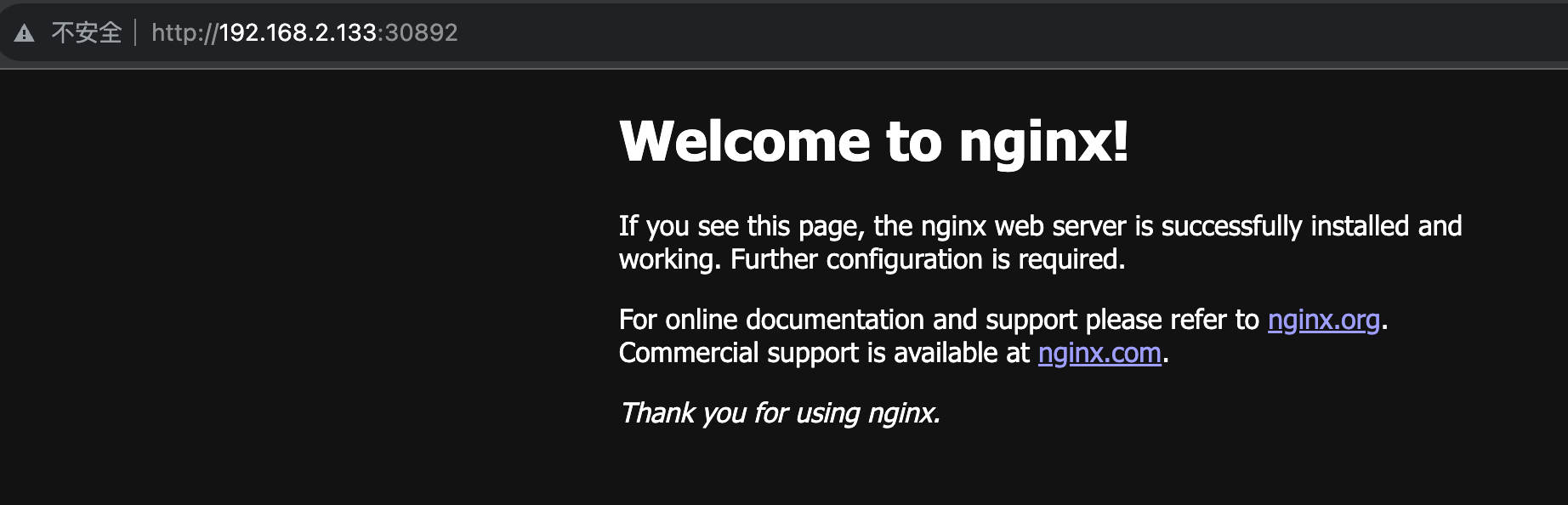

default nginx NodePort 10.96.30.102 <none> 80:30892/TCP 3s

kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 2d15h访问 节点地址 + 端口
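The NodePort is the number after the colon in the PORT(S) column (30892 above), and any node's IP works because kube-proxy opens the port on every node. kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}' prints it directly; the sketch below extracts it from a copied PORT(S) value instead (the helper name is hypothetical):

```shell
# Hypothetical helper: extract the node port from a PORT(S) cell such as
# "80:30892/TCP"; for multi-port cells only the first entry is used.
nodeport_of() {
  local cell=${1%%/*}   # drop everything from the first "/" -> "80:30892"
  echo "${cell##*:}"    # keep what follows the last colon -> "30892"
}
```

For example, curl http://192.168.2.134:$(nodeport_of 80:30892/TCP) should return the nginx welcome page.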

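Other

# Create a token that never expires

kubeadm token create --ttl 0

# List tokens

kubeadm token list

# Get the SHA-256 hash of the CA certificate's public key (the value for --discovery-token-ca-cert-hash)

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

Removing the taint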

View the taints

[root@zili01 install_k8s]# kubectl describe nodes |grep Taints

Taints: node-role.kubernetes.io/control-plane:NoSchedule

Taints: <none>

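Taints: <none>

By default the master node does not take part in scheduling; removing its taint lets the master run ordinary pods:

kubectl taint nodes --all node-role.kubernetes.io/control-plane-

This is not recommended. It is better to give the master node a smaller spec and deploy it separately from the worker nodes, so each does its own job.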
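If the taint was removed and the master should stop taking ordinary pods again, the taint can be put back with kubectl taint. A sketch; the function name and the KUBECTL dry-run variable are hypothetical:

```shell
# Hypothetical helper: put the default control-plane taint back on a node.
# Set KUBECTL=echo to print the kubectl arguments instead of running them.
restore_control_plane_taint() {
  local node=$1
  [ -n "$node" ] || { echo "usage: restore_control_plane_taint NODE" >&2; return 1; }
  ${KUBECTL:-kubectl} taint nodes "$node" node-role.kubernetes.io/control-plane:NoSchedule --overwrite
}
```

For example, restore_control_plane_taint zili01. Pods already running on the node stay there, because NoSchedule only affects new scheduling decisions.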